[Last Updated on June 2nd, 2025]

What’s the overlap between AI search and traditional search results optimization?

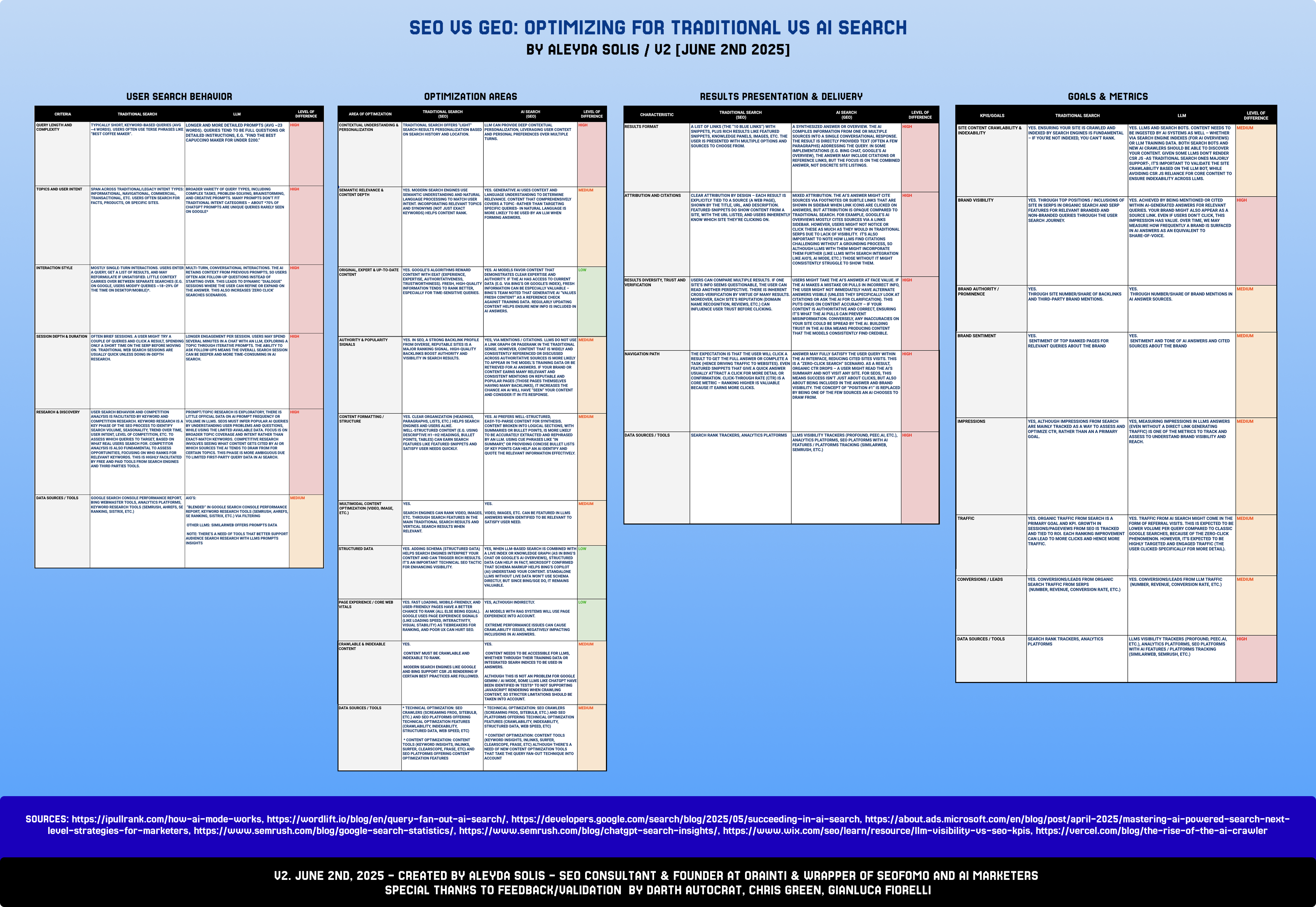

To help establish the major differences, and those areas with the highest overlap, I’ve created a comparison (which I keep updated) covering search behavior, areas of optimization, results presentation & delivery, as well as KPIs to track and goals to achieve.

Let’s go through each of the areas specified above to assess the differences and level of overlap:

User Search Behavior

User search behavior is one of the aspects that changes the most between traditional search engines and LLMs:

Query length and complexity
* Traditional search: Typically short, keyword-based queries (avg. ~4 words). Users often use terse phrases like “best coffee maker”.
* AI search (LLMs): Longer and more detailed prompts (avg. ~23 words). Queries tend to be full questions or detailed instructions, e.g. “Find the best cappuccino maker for under $200.”

Query intent types
* Traditional search: Queries span the traditional/legacy intent types: informational, navigational, commercial, transactional, etc. Users often search for facts, products, or specific sites.
* AI search (LLMs): A broader variety of query types, including complex tasks, problem-solving, brainstorming, and creative prompts. Many prompts don’t fit traditional intent categories: about *70% of ChatGPT prompts are unique queries rarely seen on Google*.

Interaction model
* Traditional search: Mostly single-turn interactions. Users enter a query, get a list of results, and may reformulate it if unsatisfied. Little context carries over between separate searches (e.g. on Google, users modify queries ~18–29% of the time on desktop/mobile)*.
* AI search (LLMs): Multi-turn, conversational interactions. The AI retains context from previous prompts, so users often ask follow-up questions instead of starting over. This leads to dynamic “dialogue” sessions where the user can refine or expand on the answer. It also increases zero-click search scenarios.

Session length and engagement
* Traditional search: Often brief sessions. A user might try a couple of queries and click a result, spending only a short time on the SERP before moving on. Traditional web search sessions are usually quick unless the user is doing in-depth research.
* AI search (LLMs): Longer engagement per session. Users may spend several minutes in a chat with an LLM, exploring a topic through iterative prompts. The ability to ask follow-ups means the overall search session can be deeper and more time-consuming in AI search.

Audience and competitor research
* Traditional search: User search behavior and competition analysis is facilitated by keyword and competitor research. Keyword research is a key phase of the SEO process, used to identify search volume, seasonality, trends over time, user intent, level of competition, etc., in order to assess which queries to target based on what real users search for. Competitor analysis is also fundamental to assess opportunities, focusing on who ranks for relevant keywords. Both are highly facilitated by free and paid tools from search engines and third parties.
* AI search (LLMs): Prompt/topic research is exploratory: there is little official data on AI prompt frequency or volume in LLMs. SEOs must infer popular AI queries by understanding user problems and questions, while using the limited data available. The focus is on broader topic coverage and intent rather than exact-match keywords. Competitive research involves seeing what content gets cited by AI, or which sources the AI tends to draw from for certain topics. This phase is more ambiguous due to the limited first-party query data in AI search.
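
Since there is little official prompt volume data, one hedged proxy is to mine the longer, question-like queries already present in your own search data (for example, a query export from the Google Search Console Performance report). The sketch below assumes a plain list of query strings. The question-word list and the 8-word cut-off are arbitrary assumptions, not published benchmarks. It reports the average query length and flags “prompt-like” queries worth exploring as AI topics.

```python
from statistics import mean

# Heuristics below are assumptions for illustration, not published benchmarks.
QUESTION_WORDS = {"how", "what", "why", "which", "when", "where", "who",
                  "can", "should", "is", "are", "do", "does"}
MIN_PROMPT_WORDS = 8  # hypothetical cut-off for "prompt-like" length


def is_prompt_like(query: str) -> bool:
    """Flag long or question-style queries as candidate AI-prompt topics."""
    words = query.lower().split()
    if not words:
        return False
    return (len(words) >= MIN_PROMPT_WORDS
            or words[0] in QUESTION_WORDS
            or query.rstrip().endswith("?"))


def summarize(queries: list[str]) -> dict:
    """Average words per query plus the subset of prompt-like queries."""
    return {
        "avg_words": mean(len(q.split()) for q in queries),
        "prompt_like": [q for q in queries if is_prompt_like(q)],
    }


if __name__ == "__main__":
    sample = [
        "best coffee maker",
        "find the best cappuccino maker for under $200",
        "how do i descale an espresso machine",
    ]
    print(summarize(sample))
```

Queries flagged this way are only candidates. They show conversational demand that already reaches traditional search, not actual LLM prompt volume.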

Tools
* Traditional search: Google Search Console Performance report, Bing Webmaster Tools, analytics platforms, keyword research tools (Semrush, Ahrefs, SE Ranking, Sistrix, etc.).
* AI search (LLMs): For AI Overviews (AIOs), data is “blended” into the Google Search Console Performance report, and keyword research tools (Semrush, Ahrefs, SE Ranking, Sistrix, etc.) expose it via filtering. For other LLMs, Similarweb offers prompt data. Note: there’s a need for tools that better support audience search research with LLM prompt insights.

Optimization Areas

There’s a high overlap in the optimization principles for traditional search and LLMs, although there are also important differences in a few key aspects:

Contextual understanding & personalization
* Traditional search: Traditional search offers “light” personalization of search results based on search history and location.
* AI search (LLMs): LLMs can provide deep contextual personalization, leveraging user context and personal preferences over multiple turns.

Semantic relevance & content depth
* Traditional search: Yes. Modern search engines use semantic understanding and natural language processing to match user intent. Incorporating relevant topics and synonyms (not just exact keywords) helps content rank.
* AI search (LLMs): Yes. Generative AI uses context and language understanding to determine relevance. Content that comprehensively covers a topic in natural language, rather than targeting specific queries, is more likely to be used by an LLM when forming answers.

Original, expert & up-to-date content
* Traditional search: Yes. Google’s algorithms reward content with E-E-A-T (experience, expertise, authoritativeness, trustworthiness). Fresh, high-quality information tends to rank better, especially for time-sensitive queries.
* AI search (LLMs): Yes. AI models favor content that demonstrates clear expertise and authority. If the AI has access to current data (e.g. via Bing’s or Google’s index), fresh information can be especially valuable: Bing’s team noted that generative AI “values fresh content” as a reference check against training data. Regularly updating content helps ensure new information is included in AI answers.

Authority & popularity signals
* Traditional search: Yes. In SEO, a strong backlink profile from diverse, reputable sites is a major ranking signal. High-quality backlinks boost authority and visibility in search results.
* AI search (LLMs): Yes, via mentions/citations. LLMs do not use a link graph or PageRank in the traditional sense. However, content that is widely and consistently referenced or discussed across authoritative sources is more likely to appear in the model’s training data or be retrieved for AI answers. If your brand or content earns many relevant and consistent mentions on reputable and popular pages (those pages themselves having many backlinks), it increases the chance that an AI will have “seen” your content and consider it in its response.

Content formatting / structure
* Traditional search: Yes. Clear organization (headings, paragraphs, lists, etc.) helps search engines and users alike. Well-structured content (e.g. using descriptive H1–H2 headings, bullet points, tables) can earn search features like featured snippets and satisfy user needs quickly.
* AI search (LLMs): Yes. AI prefers well-structured, easy-to-parse content for synthesis. Content broken into logical sections, with summaries or bullet points, is more likely to be accurately extracted and rephrased by an LLM. Using cue phrases like “In summary,” or providing concise bullet lists of key points can help an AI identify and quote the relevant information effectively.
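
Here is a simplified sketch of heading-based chunking, a common preprocessing step in retrieval pipelines, to make “easy-to-parse” content more concrete. Each heading scopes the text beneath it, so a well-labeled section becomes a self-contained, quotable unit. The sketch assumes Markdown input with `#`-style headings. It is an illustration only, not how any particular AI search system segments pages.

```python
import re
from dataclasses import dataclass

HEADING = re.compile(r"^(#{1,6})\s+(.*)$")  # Markdown ATX headings only (assumption)


@dataclass
class Chunk:
    heading: str
    text: str


def chunk_by_headings(markdown: str) -> list[Chunk]:
    """Split Markdown into heading-scoped chunks; text before the first heading gets heading ''."""
    chunks: list[Chunk] = []
    heading, lines = "", []

    def flush() -> None:
        # Emit the current section unless it is completely empty.
        body = "\n".join(lines).strip()
        if heading or body:
            chunks.append(Chunk(heading, body))

    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            flush()
            heading, lines = match.group(2).strip(), []
        else:
            lines.append(line)
    flush()
    return chunks


if __name__ == "__main__":
    doc = ("Intro text\n## What is a cappuccino?\nEspresso with steamed milk.\n"
           "## In summary\n- Short answer here.")
    for chunk in chunk_by_headings(doc):
        print(repr(chunk.heading), "->", repr(chunk.text))
```

A section whose heading states its topic (“What is a cappuccino?”) yields a chunk that answers it on its own. A vague heading, or none at all, leaves the body without that context.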

Multimodal content optimization (video, image, etc.)
* Traditional search: Yes. Search engines can rank videos, images, etc. through search features in the main search results, as well as in vertical search results when relevant.
* AI search (LLMs): Yes. Videos, images, etc. can be featured in LLM answers when identified as relevant to satisfying the user’s need.

Structured data
* Traditional search: Yes. Adding schema (structured data) helps search engines interpret your content and can trigger rich results. It’s an important technical SEO tactic for enhancing visibility.
* AI search (LLMs): Yes, when LLM-based search is combined with a live index or knowledge graph (as in Bing’s chat or Google’s AI Overviews), structured data can help. In fact, Microsoft confirmed that schema markup helps Bing’s Copilot (AI) understand your content. Standalone LLMs without live data won’t use schema directly, but since Bing and Google’s AI Overviews (formerly SGE) do, it remains valuable.
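
As a minimal illustration of schema markup, the sketch below builds a schema.org `Article` object as JSON-LD and wraps it in the `<script type="application/ld+json">` tag that search engines read. The field values are placeholders. Which properties to include depends on your content type and on Google’s structured data guidelines for it.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str,
                   date_modified: str, url: str) -> str:
    """Build a <script type="application/ld+json"> tag for a schema.org Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
        "dateModified": date_modified,    # keep in sync with real content updates
        "mainEntityOfPage": url,
    }
    # Escape "</" so a value can never close the surrounding <script> element early.
    payload = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    print(article_jsonld("AI search vs traditional search", "Jane Doe",
                         "2025-01-15", "2025-06-02", "https://example.com/ai-search"))
```

Keeping `dateModified` accurate also ties in with the freshness point above, since it is a machine-readable signal of when the content last changed.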

Page experience / Core Web Vitals
* Traditional search: Yes. Fast-loading, mobile-friendly, and user-friendly pages have a better chance to rank (all else being equal). Google uses page experience signals (like loading speed, interactivity, visual stability) as tiebreakers for ranking, and poor UX can hurt SEO.
* AI search (LLMs): Yes, although indirectly. AI models with RAG systems will take page experience into account. Extreme performance issues can cause crawlability issues, negatively impacting inclusion in AI answers.
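
Google publishes fixed thresholds for the three Core Web Vitals, assessed at the 75th percentile of real page loads:

* Largest Contentful Paint (LCP) is good at 2.5 s or less and poor above 4 s.
* Interaction to Next Paint (INP) is good at 200 ms or less and poor above 500 ms.
* Cumulative Layout Shift (CLS) is good at 0.1 or less and poor above 0.25.

The minimal sketch below classifies p75 values you already have, for example from the Chrome UX Report or your own field data. The sample page values are hypothetical.

```python
# Google's published Core Web Vitals thresholds: (upper bound for "good",
# upper bound for "needs improvement"); anything above the second is "poor".
THRESHOLDS = {
    "LCP": (2500, 4000),  # Largest Contentful Paint, milliseconds
    "INP": (200, 500),    # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),   # Cumulative Layout Shift, unitless
}


def rate(metric: str, p75_value: float) -> str:
    """Classify a 75th-percentile field value as 'good', 'needs improvement' or 'poor'."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if p75_value <= good_max:
        return "good"
    if p75_value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


def passes_core_web_vitals(p75: dict[str, float]) -> bool:
    """True only if all three metrics are rated 'good'."""
    return all(rate(metric, p75[metric]) == "good" for metric in THRESHOLDS)


if __name__ == "__main__":
    page = {"LCP": 2300, "INP": 260, "CLS": 0.05}  # hypothetical p75 values
    print({m: rate(m, v) for m, v in page.items()}, passes_core_web_vitals(page))
```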

Crawlable & indexable content
* Traditional search: Yes. Content must be crawlable and indexable to rank. Modern search engines like Google and Bing support client-side-rendered (CSR) JavaScript if certain best practices are followed.
* AI search (LLMs): Yes. Content needs to be accessible to LLMs, whether through their training data or integrated search indices, to be used in answers. Although this is not a problem for Google Gemini / AI Mode, some LLMs like ChatGPT have been identified in tests* as not supporting JavaScript rendering when crawling content, so these stricter limitations should be taken into account.
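
Accessibility rules can differ per crawler, so a useful first check is whether your robots.txt allows both search bots and AI crawlers. The sketch uses Python’s standard `urllib.robotparser`. The user-agent tokens listed (`Googlebot`, `Bingbot`, `GPTBot`, `OAI-SearchBot`, `PerplexityBot`) are examples of published crawler names; confirm the current tokens in each provider’s documentation.

```python
from urllib.robotparser import RobotFileParser

# Example user-agent tokens; confirm current names in each provider's documentation.
CRAWLERS = ("Googlebot", "Bingbot", "GPTBot", "OAI-SearchBot", "PerplexityBot")


def crawler_access(robots_txt: str, url: str,
                   agents: tuple[str, ...] = CRAWLERS) -> dict[str, bool]:
    """Report whether the given robots.txt lets each crawler fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    robots = "User-agent: GPTBot\nDisallow: /\n\nUser-agent: *\nAllow: /\n"
    for agent, allowed in crawler_access(robots, "https://example.com/blog/post").items():
        print(f"{agent:15} {'allowed' if allowed else 'blocked'}")
```

Treat the result as a first pass. Python’s parser applies rules in file order rather than the longest-match rule Google uses. Being allowed by robots.txt also says nothing about whether a bot renders JavaScript.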

Tools
* Traditional search:
  * Technical optimization: SEO crawlers (Screaming Frog, Sitebulb, etc.) and SEO platforms offering technical optimization features (crawlability, indexability, structured data, web speed, etc.)
  * Content optimization: content tools (Keyword Insights, InLinks, Surfer, Clearscope, Frase, etc.) and SEO platforms offering content optimization features
* AI search (LLMs):
  * Technical optimization: SEO crawlers (Screaming Frog, Sitebulb, etc.) and SEO platforms offering technical optimization features (crawlability, indexability, structured data, web speed, etc.)
  * Content optimization: content tools (Keyword Insights, InLinks, Surfer, Clearscope, Frase, etc.), although there’s a need for new content optimization tools that take the query fan-out technique into account
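
Query fan-out, as Google has described it for AI Mode, breaks a question into subtopics and issues multiple related searches at once. A page may therefore be retrieved for sub-queries it never explicitly targets. Those sub-queries aren’t exposed, so the sketch below assumes a hand-written list of hypothetical ones. It uses a deliberately crude term-overlap score to flag which of them no section of a page addresses. It’s a thinking aid for topic coverage, not how any search engine actually measures relevance.

```python
import re
from typing import Optional

# Tiny illustrative stopword list (assumption); real tools use far richer NLP.
STOPWORDS = {"the", "a", "an", "for", "of", "to", "in", "and", "or", "is",
             "are", "how", "what", "which", "best", "under", "vs", "with"}


def terms(text: str) -> set[str]:
    """Lowercased word tokens minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9$]+", text.lower()) if w not in STOPWORDS}


def fan_out_coverage(sub_queries: list[str], sections: dict[str, str],
                     min_overlap: float = 0.5) -> dict[str, Optional[str]]:
    """Map each (hypothetical) sub-query to the section heading sharing the largest
    fraction of its terms, or None when no section reaches `min_overlap`."""
    result: dict[str, Optional[str]] = {}
    for query in sub_queries:
        q_terms = terms(query)
        if not q_terms:
            result[query] = None  # nothing meaningful to match
            continue
        best, best_score = None, 0.0
        for heading, body in sections.items():
            score = len(q_terms & terms(f"{heading} {body}")) / len(q_terms)
            if score > best_score:
                best, best_score = heading, score
        result[query] = best if best_score >= min_overlap else None
    return result


if __name__ == "__main__":
    page = {
        "Budget picks": "Cappuccino makers under $200 with a steam wand.",
        "Milk frothing": "Steam wand vs automatic milk frother.",
    }
    subs = ["cappuccino maker under $200", "automatic milk frother", "descaling schedule"]
    print(fan_out_coverage(subs, page))  # None marks a likely content gap
```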

Results Presentation & Delivery

Results presentation and delivery is the other area where the biggest differences can be found:

Results format
* Traditional search: A list of links (the “10 blue links”) with snippets, plus rich results like featured snippets, knowledge panels, images, etc. The user is presented with multiple options and sources to choose from.
* AI search (LLMs): A synthesized answer or overview. The AI compiles information from one or multiple sources into a single conversational response. The result is directly provided text (often a few paragraphs) addressing the query. In some implementations (e.g. Bing Chat, Google’s AI Overviews), the answer may include citations or reference links, but the focus is on the combined answer, not discrete site listings.

Attribution and citations
* Traditional search: Clear attribution by design: each result is explicitly tied to a source (a web page), shown by the title, URL, and description. Even featured snippets show content from a site with its URL listed, and users inherently know which site they’re clicking on.
* AI search (LLMs): Mixed attribution. The AI’s answer might cite sources via footnotes or subtle link icons that open a sidebar when clicked, but attribution is opaque compared to traditional search. For example, Google’s AI Overviews mostly cite sources via a links sidebar. However, users might not notice or click these as much as they would in traditional SERPs due to their lower visibility. It’s also important to note that LLMs find citations challenging without a grounding process: LLMs with search integration (like AIOs, AI Mode, etc.) might incorporate citations more, while those without it might consistently struggle to show them.

Results diversity, trust and verification
* Traditional search: Users can compare multiple results. If one site’s information seems questionable, the user can read another perspective. There is inherent cross-verification by virtue of the many results. Moreover, each site’s reputation (domain name recognition, reviews, etc.) can influence user trust before clicking.
* AI search (LLMs): Users might take the AI’s answer at face value. If the AI makes a mistake or pulls in incorrect information, the user might not immediately have alternative answers visible (unless they specifically look at the citations or ask the AI for clarification). This puts the onus on content accuracy: if your content is authoritative and correct, ensuring it’s what the AI pulls can prevent misinformation. Conversely, any inaccuracies on your site could be spread by the AI. Building trust in the AI era means producing content that the models consistently find credible.

Clicks and CTR
* Traditional search: The expectation is that the user will click a result to get the full answer or complete a task (hence driving traffic to websites). Even featured snippets that give a quick answer usually attract a click for more detail or confirmation. Click-through rate (CTR) is a core metric: ranking higher is valuable because it earns more clicks.
* AI search (LLMs): The answer may fully satisfy the user’s query within the AI interface, reducing visits to the cited sites. This is a “zero-click search” scenario. As a result, organic CTR drops: a user might read the AI’s summary and not visit any site. For SEOs, this means success isn’t just about clicks, but also about being included in the answer and about brand visibility. The concept of “position #1” is replaced by being one of the few sources an AI chooses to draw from.

Tools
* Traditional search: Search rank trackers, analytics platforms.
* AI search (LLMs): LLM visibility trackers (Profound, Peec.ai, etc.), analytics platforms, SEO platforms with AI features / AI platform tracking (Similarweb, Semrush, etc.).

Goals and Metrics

In the case of goals and metrics, the differences mainly lie in the type of goals to pursue, given the user behavior characteristics of each platform type and their specific interfaces. At a high level, however, we will want to track similar KPIs, while expecting different outcomes:

Site content crawlability & indexability
* Traditional search: Yes. Ensuring your site is crawled and indexed by search engines is fundamental: if you’re not indexed, you can’t rank.
* AI search (LLMs): Yes, for both LLM and search bots. Content needs to be ingested by AI systems as well, whether via search engine indexes (for AI Overviews) or LLM training data. Both search bots and new AI crawlers should be able to discover your content. Given that some LLM crawlers don’t render CSR JavaScript (which traditional search engines largely support), it’s important to validate the site’s crawlability for each LLM bot, while avoiding reliance on CSR JavaScript for core content to ensure indexability across LLMs.
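
You can approximate what a non-rendering crawler receives by checking whether core content is present in the raw HTML response, before any JavaScript runs. The sketch below uses Python’s standard `html.parser` to pull the text outside `<script>` and `<style>` elements and reports which of your key phrases are missing. Fetching the HTML is left out so the check stays self-contained; `urllib.request`, for instance, doesn’t execute JavaScript. The phrases to look for are your own choice.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect text outside <script> and <style> elements from raw (unrendered) HTML."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def missing_in_raw_html(raw_html: str, key_phrases: list[str]) -> list[str]:
    """Return the key phrases NOT found in the server-delivered text (case- and whitespace-insensitive)."""
    extractor = _TextExtractor()
    extractor.feed(raw_html)
    extractor.close()
    text = " ".join(" ".join(extractor.parts).split()).lower()
    return [p for p in key_phrases if " ".join(p.split()).lower() not in text]


if __name__ == "__main__":
    csr_page = ('<html><body><div id="root"></div><script>'
                'document.getElementById("root").textContent = "Cappuccino makers";'
                '</script></body></html>')
    print(missing_in_raw_html(csr_page, ["Cappuccino makers"]))
```

In the example, the client-side-rendered page exposes only an empty container. The phrase is therefore reported as missing, even though a browser would display it.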

Brand visibility
* Traditional search: Yes. Achieved through top positions / inclusion of the site in SERPs, both in organic results and SERP features, for relevant branded and non-branded queries throughout the user’s search journey.
* AI search (LLMs): Yes. Achieved by being mentioned or cited within AI-generated answers for relevant queries. Your brand might also appear as a source link. Even if users don’t click, this impression has value. Over time, we may measure how frequently a brand is surfaced in AI answers as an equivalent to share of voice.
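
Share of voice in AI answers can be approximated by running a fixed set of prompts, storing the answers, and counting which brands each answer mentions. The sketch below assumes you already have the answer texts, collected manually or via a visibility tracker export. The brand names are hypothetical. Case-insensitive whole-word matching stands in for the more robust entity matching a real tracker would need.

```python
import re
from collections import Counter


def share_of_voice(answers: list[str], brands: list[str]) -> dict[str, float]:
    """Each brand's share of all brand mentions, counting a brand at most once per answer."""
    counts = Counter()
    for answer in answers:
        for brand in brands:
            if re.search(rf"\b{re.escape(brand)}\b", answer, flags=re.IGNORECASE):
                counts[brand] += 1
    total = sum(counts.values())
    return {brand: (counts[brand] / total if total else 0.0) for brand in brands}


if __name__ == "__main__":
    answers = [  # hypothetical stored AI answers for a fixed prompt set
        "BrewCo and Milkly are popular choices.",
        "Try BrewCo.",
        "Crema Labs makes a good one; brewco too.",
    ]
    print(share_of_voice(answers, ["BrewCo", "Milkly", "Crema Labs"]))
```

Counting each brand once per answer keeps a single answer that repeats a name from inflating its share. Tracking the same prompt set over time is what turns this into a trend.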

Brand authority / prominence
* Traditional search: Yes. Through the site’s number/share of backlinks and third-party brand mentions.
* AI search (LLMs): Yes. Through the number/share of brand mentions in AI answer sources.

Brand sentiment
* Traditional search: Yes. Sentiment of top-ranked pages for relevant queries about the brand.
* AI search (LLMs): Yes. Sentiment and tone of AI answers and cited sources about the brand.

Impressions
* Traditional search: Yes, although impressions from search are mainly tracked as a way to assess and optimize CTR, rather than as a primary goal.
* AI search (LLMs): Yes. Measuring impressions in LLM answers (even without a direct link generating traffic) is one of the metrics to track to understand brand visibility and reach.

Traffic
* Traditional search: Yes. Organic traffic from search is a primary goal and KPI. Growth in sessions/pageviews from SEO is tracked and tied to ROI. Each ranking improvement can lead to more clicks and hence more traffic.
* AI search (LLMs): Yes. Traffic from AI search might come in the form of referral visits. This is expected to be lower in volume per query than classic Google searches because of the zero-click phenomenon. However, it’s expected to be highly targeted and engaged traffic (the user clicked specifically for more detail).
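
AI referral visits can usually be separated from other traffic by the referrer’s hostname. The sketch below maps referrer URLs to channels. The hostname lists are assumptions you will need to maintain yourself, since platforms change domains and some AI visits arrive with no referrer at all. Clicks from Google’s AI Overviews and AI Mode generally arrive as ordinary Google referrals, so this approach can’t isolate them.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed hostname-to-platform mappings; keep them updated as platforms change.
AI_REFERRERS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
    "claude.ai": "Claude",
}
SEARCH_REFERRERS = {"google.com": "Google", "bing.com": "Bing", "duckduckgo.com": "DuckDuckGo"}


def _matches(host: str, domain: str) -> bool:
    """True for the domain itself or any of its subdomains."""
    return host == domain or host.endswith("." + domain)


def classify_referrer(referrer: str) -> str:
    """Return 'ai:<platform>', 'search:<engine>', 'other' or 'direct/unknown'."""
    host = (urlparse(referrer).hostname or "").lower()
    if not host:
        return "direct/unknown"
    # AI hosts are checked first so gemini.google.com isn't counted as Google search.
    for domain, name in AI_REFERRERS.items():
        if _matches(host, domain):
            return f"ai:{name}"
    for domain, name in SEARCH_REFERRERS.items():
        if _matches(host, domain):
            return f"search:{name}"
    return "other"


if __name__ == "__main__":
    referrers = ["https://chatgpt.com/", "https://www.google.com/", "", "https://www.perplexity.ai/search"]
    print(Counter(classify_referrer(r) for r in referrers))
```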

Conversions
* Traditional search: Yes. Conversions/leads from organic search traffic from SERPs (number, revenue, conversion rate, etc.).
* AI search (LLMs): Yes. Conversions/leads from LLM traffic (number, revenue, conversion rate, etc.).
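
Once sessions are labeled by channel (for instance, traditional organic search vs. LLM referrals), conversions, conversion rate and revenue can be compared side by side. The `Session` records and channel names below are hypothetical. In practice, they would come from your analytics platform’s export.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Session:
    channel: str          # hypothetical labels, e.g. "organic_search" or "llm_referral"
    converted: bool
    revenue: float = 0.0


def channel_report(sessions: list[Session]) -> dict[str, dict[str, float]]:
    """Sessions, conversions, conversion rate and revenue per channel."""
    totals = defaultdict(lambda: {"sessions": 0, "conversions": 0, "revenue": 0.0})
    for s in sessions:
        row = totals[s.channel]
        row["sessions"] += 1
        row["conversions"] += int(s.converted)
        row["revenue"] += s.revenue
    for row in totals.values():
        row["conversion_rate"] = row["conversions"] / row["sessions"]
    return dict(totals)


if __name__ == "__main__":
    data = [
        Session("organic_search", False), Session("organic_search", True, 50.0),
        Session("organic_search", False), Session("organic_search", False),
        Session("llm_referral", True, 80.0), Session("llm_referral", False),
    ]
    for channel, row in channel_report(data).items():
        print(channel, row)
```

With only a handful of LLM sessions, conversion rates swing widely. Compare them over longer periods before drawing conclusions.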

Tools
* Traditional search: Search rank trackers, analytics platforms.
* AI search (LLMs): LLM visibility trackers (Profound, Peec.ai, etc.), analytics platforms, SEO platforms with AI features / AI platform tracking (Similarweb, Semrush, etc.).

TL;DR

There’s definitely an overlap between the optimization pillars of LLMs and traditional search engines. However, there are also important differences to take into account when establishing a strategy for each, such as the level of personalization or, with LLMs, the need to optimize for context (rather than queries) due to the query fan-out technique.

This is also influenced by the major differences in user behavior and in results presentation and delivery. Data access compounds this: search popularity of queries in LLMs, for example, is currently hard to obtain. These differences shape the type of content it makes sense to prioritize for one or the other, as well as the different goals we seek to achieve in doing so.

I expect that with the rapid adoption of LLMs, the further integration and expansion of AI Mode, and greater access to first- and third-party data that facilitates their analysis, we’ll be able to start putting into practice dedicated strategies that maximize visibility and conversions in each of these search platforms.

Sources

The insights/data mentioned above are from:

Special thank you’s

To Darth Autocrat, Chris Green and Gianluca Fiorelli for their review and feedback on this piece.
